SYSTEM AND METHOD FOR RAPID VIDEO COMPRESSION

FIELD OF THE INVENTION

The present invention is of a system and method for rapid video compression, and in particular, for such a system and method which is both rapid and which is compatible with a wide variety of computational devices.

BACKGROUND OF THE INVENTION

Streaming video data to computational devices over a network, such as the Internet, or over a LAN (local area network) or WAN (wide area network), can be difficult due to bandwidth restrictions. Indeed, even the transmission of video data over a network through other, non-streaming, mechanisms can be difficult because of the lack of bandwidth. More efficient transmission of video data is therefore clearly desirable. In order to increase the amount of video data which can be transmitted on any given amount of bandwidth, video data is typically transmitted in a compressed format. Compression increases the amount of video data which can be transmitted, but may also increase the amount of time required to process the video data, both before transmission and upon reception, before the video data can be played back. Efficient, rapid methods for video compression are therefore particularly useful.

Two standard methods for video compression are MPEG-2 (Moving Picture Experts Group-2) and MPEG-4. These two methods were both developed by the same industry standards group, the Moving Picture Experts Group. However, the two methods differ in both functionality and availability on end-user computational devices (see for example http://www.cselt.it/mpeg/ as of April 15, 2001). MPEG-2 is currently used for DVD and digital television set-top boxes. According to this compression method, video data and audio data are divided into two separate streams, each of which is compressed separately according to the most efficient compression method for that type of data. The separate data streams must be recombined before being played back on an end-user device, so the MPEG-2 standard includes mechanisms for ensuring that the video data and the audio data are synchronized.

MPEG-2 has some advantages for controlling certain parameters and characteristics of the compressed audio and video data; however, end-user devices which are capable of handling this compressed data format are not currently as widespread as those which are capable of handling MPEG-4.

In contrast, MPEG-4 uses a system of "media objects", in which video data is divided into these objects for transmission to the end-user device. These media objects may include non-moving (still) images, video objects (such as a moving figure) and audio objects, as well as other types of objects. These objects are then integrated for being played back to the end user by the end-user device. MPEG-4 is widely available on end-user devices, particularly for those devices which are operated with one of the Windows™ operating systems (Microsoft, USA), as certain versions of this operating system incorporate a media player which is capable of decompressing and playing back video data which has been compressed according to MPEG-4. Generally, MPEG-4 has been designed to be suitable for compression of video data for the Internet/World Wide Web and for mobile computational devices, such as WAP (wireless application protocol) enabled cellular telephones for example. Thus, end-user devices are currently restricted in the type of video data compression which they can receive and/or generate, because of the limitations of each of the above methods of video data compression.

SUMMARY OF THE INVENTION

The background art does not teach or suggest a method or system for efficient video compression which is suitable for a wide range of end-user computational devices and which is able to perform multi-stage compression within a single device or other implementation. The background art also does not teach or suggest such a method or system which uses the best qualities of more than one type of MPEG compression. The present invention overcomes these deficiencies of the background art by providing a system and method which is useful for providing compressed video data to a wide range of end-user computational devices. The present invention has been shown to achieve a greater degree of compression of the video data than other background art methods and devices, while still providing a solution which is compatible with a wide range of commonly available end-user devices. In addition, the present invention is also adaptable to many different types of end-user computational devices.

The present invention achieves these goals by providing a system and method which are an integrated solution for capturing an analog video signal, processing this signal to form processed video data, and efficiently compressing the video data. The compressed video data can then optionally be stored as a digital file and/or streamed directly as a digital signal over a network, such as the Internet for example. The present invention compresses the video data in a plurality of stages, including at least one stage for processing the analog data in order to increase the efficiency of compression, and a second stage for actual compression of the resultant digital video data.

More preferably, the first stage also includes compression of the video data after digitization of the analog signal. Optionally and more preferably, the compressed video data from the first stage is decompressed before being recompressed in the second stage. Most preferably, the method of compression of the video data for the first stage is performed according to MPEG-2, while the method of compression of the video data for the second stage is performed according to MPEG-4.

According to the present invention, there is provided a method for compressing video data, comprising: processing an analog video signal according to at least one parameter for determining at least one characteristic of the resultant compressed video data, including converting the processed analog video signal into digital video data; and compressing the digital video data to form the compressed video data, wherein at least one characteristic of the resultant compressed video data is determined according to the at least one parameter.

According to another embodiment of the present invention, there is provided a system for compressing video data, comprising: (a) an analog output device for producing an analog video signal; (b) an integrated hardware device for capturing the analog video signal, processing the analog video signal to form digital video data, and for compressing the digital video data in two stages to form compressed video data; and (c) a computational device for controlling the integrated hardware device and for being in communication with the integrated hardware device.

Hereinafter, the term "computational device" includes, but is not limited to, any type of wireless device and any type of computer. Examples of such computational devices include, but are not limited to, handheld devices, including devices operating with the Palm OS®; hand-held computers; PDA (personal digital assistant) devices; cellular telephones; any type of WAP (wireless application protocol) enabled device; and portable computers of any type and wearable computers of any sort, which have an operating system. An end-user device is any type of computational device which is used for playing back video data to a user.

The present invention could be implemented in software, firmware or hardware. The present invention could be implemented as substantially any type of integrated circuit or other electronic device capable of performing the functions described herein. Also, the present invention can be described as a plurality of instructions being executed by a data processor.

BRIEF DESCRIPTION OF THE DRAWINGS

The invention is herein described, by way of example only, with reference to the accompanying drawings, wherein:

FIG. 1 is a schematic block diagram showing an exemplary system according to the present invention for two-stage video compression;

FIG. 2 shows an exemplary architecture of a video card for insertion into a computational device according to the present invention; and

FIG. 3 is a flowchart of an exemplary method according to the present invention for operation with the system of FIG. 1.

DESCRIPTION OF THE PREFERRED EMBODIMENTS

The present invention is of a system and method for capturing an analog video signal, processing this signal to form processed video data, and efficiently compressing the video data. The compressed video data can then optionally be stored as a digital file and/or streamed directly as a digital signal over a network, such as the Internet for example. The present invention compresses the video data in a plurality of stages, including at least one stage for processing the analog data in order to increase the efficiency of compression, and a second stage for actual compression of the resultant digital video data.

More preferably, the first stage also includes compression of the video data after digitization of the analog signal. Optionally and more preferably, the compressed video data from the first stage is decompressed before being recompressed in the second stage. Most preferably, the method of compression of the video data for the first stage is performed according to MPEG-2, while the method of compression of the video data for the second stage is performed according to MPEG-4.

According to preferred embodiments of the present invention, there is provided a system featuring a set of integrated hardware and software components, preferably including at least a hardware card with a PCI interface installed on a card slot which is compatible with PC (personal computer) hardware, and digital-video compression software which communicates with the hardware card. Examples of card slots which are compatible with PC hardware include, but are not limited to, card slots structured according to the USB (universal serial bus) architecture standard, the IEEE 1394 ('FireWire') standard, and the ISA (industry standard architecture) standard. These components are preferably connected to a network, optionally an IP network such as a LAN, WAN and/or the Internet. Optionally and more preferably, these hardware and software components are combined into a single device, such as a set-top box for example, which most preferably can be used to perform any one or more of the following tasks: capture a live feed from a video camera and transmit live streaming video data in real time over a network; capture a live feed from a video camera and store the resultant video data as a file; capture any data from a playback device and transmit such captured data; and capture any data from a playback device and store such data as a file. The data which is captured from the playback device, and which forms the input to the present invention for processing and compression, is preferably an analog signal, which may be in various formats, including but not limited to VHS, Super VHS and BetaCam. The resulting video data is in a format which is suitable for storage and/or playback by a computational device.

According to other preferred embodiments of the present invention, an application layer is more preferably featured. The application layer is laid over the above system, and interacts with the components of the system in order to provide various types of functionality, for example through software program modules. Examples of these different types of functionality include, but are not limited to, security and surveillance, entertainment, and UI (User Interface) with which the system operator (user) interacts in order to operate the system of the present invention. The system operator is distinguished from the end user according to the type of interaction with the system; the former individual controls the various parameters for capturing and transmitting the video data, while the latter controls playing back the video data. Of course, the system operator and the end user could optionally be the same individual, for example when video data is played back for the system operator.

These preferred components of the system of the present invention are more preferably used to perform a method according to the present invention for compressing video data. The result of the method of the present invention is a significantly lower data-rate output for given input and quality parameters (picture size, color type and depth, etc.). In other words, the captured file is significantly smaller than that produced by other processes with the same quality parameters, and the required streaming bandwidth is much lower than that of background art streaming solutions, as shown by the test results below.

The principles and operation of a method and a system according to the present invention may be better understood with reference to the drawings and the accompanying description. Turning now to the drawings, Figure 1 shows an exemplary system according to the present invention for performing video compression in a plurality of stages. As shown, a system 10 preferably features at least an integrated device 12 according to the present invention. Optionally, integrated device 12 is implemented as a plurality of separate devices, although this implementation is less preferred. Integrated device 12 is connected to a source of a video signal, whether analog or digital, which is preferably a video camera 14 as shown, but which could also be a VCR or other device for recording, playing back, storing and/or generating video signals. Integrated device 12 then captures the video signals, and compresses these signals into compressed video data according to a process described in greater detail below, particularly with regard to the exemplary method of Figure 3. The compressed video data is then preferably transmitted through a network 16, such as a LAN, WAN or the Internet for example, to a remote end-user device 18 as shown. End-user device 18 then decompresses and plays back the video data to the end user.

According to preferred embodiments of the present invention, integrated device 12 features a hardware card 20 according to the present invention, which is shown in more detail with regard to Figure 2 below. Hardware card 20 optionally and preferably communicates with an application layer 22, which is a unique software driver. Integrated device 12 is optionally and more preferably implemented as a computer, such that application layer 22 functions as the 'translator' between hardware card 20 and an operating system installed on integrated device 12. Integrated device 12 also optionally and more preferably features a storage device 24, such as a flash memory device and/or a magnetic storage medium device or "hard drive", and a network card 26 for connecting to network 16. Integrated device 12 may also optionally feature user input and output devices, including but not limited to a keyboard, mouse or other pointing device, and a monitor (not shown).

Application layer 22 also preferably features a final encoding unit 28, which is more preferably implemented as an external software component which reads the data from hardware card 20, converts such data to its final format and delivers the data for distribution through network 16.

Optionally, application layer 22 is implemented as a software layer, which is installed on integrated device 12. Also optionally, application layer 22 may feature several different types of software functions, each of which performs a different task and each of which interacts with hardware card 20. Application layer 22 more preferably controls the functionality and performance that can potentially be utilized by using hardware card 20, and also uses hardware card 20 for capturing, storing and/or transmitting video data over network 16.

For example, application layer 22 may optionally feature such functions as a User Interface, which provides control over the modifiable parameters in the video/audio capturing and transmitting process, and also provides control over other operational applications which use hardware card 20; a security application; a surveillance application; and an entertainment application.

The user interface is optionally and more preferably provided to the system operator (user) through a system operator station 30, which is a computational device connected to integrated device 12. System operator station 30 enables the system operator to control the parameters of the compression process, and also enables the system operator to control the functions of integrated device 12. System operator station 30 preferably features a system operator UI 32, for communicating with hardware card 20 and application layer 22 of integrated device 12; and a local playback device 34 for receiving video data for playback from storage device 24.

Figure 2 shows an exemplary architecture of the hardware card of Figure 1 for capturing, compressing and/or transmitting video data in more detail. Hardware card 20 preferably features an input-device unit 36, which can optionally be attached to the remainder of hardware card 20 through a composite connection, S-Video connection, or any other analog/digital connection for connecting to integrated device 12 (not shown; see Figure 1). The input format can be NTSC (National Television System Committee) or PAL (Phase Alternating Line), for example. NTSC is a color TV standard that was developed in the U.S. and which is currently used in the U.S. for television broadcasts. NTSC broadcasts 30 interlaced frames per second (60 half frames per second, or 60 "fields" per second in TV jargon) at 525 lines of resolution. The signal is a composite of red, green and blue and includes an audio FM frequency and an MTS signal for stereo. PAL is a color TV standard that was developed in Germany and is widely used in Europe. It broadcasts 25 interlaced frames per second (50 half frames per second) at 625 lines of resolution. The color signals for PAL are maintained automatically, and the television set does not have a user-adjustable hue control. Input-device unit 36 is the physical connection point to integrated device 12 as a whole.
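By way of illustration only, the timing relationships between the two input formats described above can be expressed as a minimal Python sketch. The class and property names are hypothetical; the sketch uses the nominal 30 frames per second given in the text, whereas color NTSC actually runs at 30/1.001 (about 29.97) frames per second.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnalogStandard:
    """Nominal timing of an interlaced analog TV standard (illustrative only)."""
    name: str
    frames_per_second: int   # nominal interlaced frame rate
    lines_per_frame: int     # total scan lines per frame

    @property
    def fields_per_second(self) -> int:
        # Each interlaced frame is sent as two fields ("half frames").
        return 2 * self.frames_per_second

    @property
    def lines_per_field(self) -> float:
        # An odd total line count means each field carries half a line extra.
        return self.lines_per_frame / 2

    @property
    def line_frequency_hz(self) -> int:
        # Horizontal scan rate: lines per frame times frames per second.
        return self.lines_per_frame * self.frames_per_second


NTSC = AnalogStandard("NTSC", 30, 525)
PAL = AnalogStandard("PAL", 25, 625)

for std in (NTSC, PAL):
    print(std.name, std.fields_per_second, std.lines_per_field, std.line_frequency_hz)
```

The 60 and 50 fields per second stated in the text follow directly from doubling the frame rate of each standard.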

The connection plugs for input-device unit 36 are preferably industry-standard connectors, and are optionally third party components which can be purchased "off the shelf". These connectors are standard input connectors and could optionally be implemented as RCA connectors, for example. RCA connectors are a standard plug and socket for a two-wire (signal and ground) coaxial cable that is widely used to connect audio and video components. Optionally and more preferably, there are four connection plugs 37 on hardware card 20, optionally according to one of the following combinations: 4 x Composite connections; or 3 x Composite connections + 1 x S-Video connection. Composite video mixes the luminance, color information and synchronization signals together into a single signal for recording and transmission; in particular, RCA connectors are commonly used for composite video on VCRs, camcorders and other consumer appliances. S-video (Super-video) is a format for recording and transmitting video which keeps the luminance (Y) and color (C) information on separate channels. S-video uses a special 4-pin mini-DIN connector. Connectors of this type are widely used on camcorders, VCRs and A/V receivers and amplifiers, as the format can improve transmission quality and the image at the receiving end.
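For example, a driver or configuration tool could check that a requested plug layout matches one of the two combinations listed above. The following is a minimal Python sketch under that assumption; the function name and the plug-type strings are hypothetical and are not taken from any actual card driver.

```python
from collections import Counter
from typing import Iterable

# The two four-plug combinations listed in the text.
ALLOWED_CONFIGURATIONS = (
    Counter({"composite": 4}),
    Counter({"composite": 3, "s-video": 1}),
)


def is_supported_configuration(plugs: Iterable[str]) -> bool:
    """Return True if the plug types (in any order) match an allowed layout."""
    requested = Counter(p.lower() for p in plugs)  # normalize "S-Video" etc.
    return requested in ALLOWED_CONFIGURATIONS
```

Counting plug types, rather than comparing ordered lists, makes the check independent of which physical socket carries the S-Video input.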

Hardware card 20 also preferably features a capturing and initial encoding unit 38, for performing at least one, but preferably all, of the following tasks. First, capturing unit 38 receives the analog signal from input-device unit 36 and digitizes the analog signal. The output of this process is the same original signal in a digital format.

Second, capturing unit 38 compresses the video data by using a compression algorithm, which is optionally and more preferably performed according to MPEG-2. The output of this stage in the process is compressed digital data. The MPEG-2 compression format is preferred since it enables some parameters of the output result to be controlled and modified, such as picture size, frame rate, etc. By using system operator UI 32 (not shown; see Figure 1), the system operator can optionally and preferably modify those parameters, which are then set for the chip operation by the driver for hardware card 20, through the operation of application layer 22. The default picture size of the output result is optionally and more preferably 'Half-D1', which is 320 x 240 pixels per frame. Optionally and most preferably, capturing unit 38 is implemented as a single chip together with a memory buffer unit 40. Memory buffer unit 40 is preferably implemented as a third party chip which also functions as temporary data storage in the process. Memory buffer unit 40 preferably controls the supply and demand of the data to capturing unit 38.
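The supply-and-demand role of memory buffer unit 40 resembles a bounded producer/consumer buffer. The following minimal Python sketch illustrates that idea in software only, assuming a capture thread as the producer and an encoding loop as the consumer; it is not the hardware implementation, and the frame values and the "enc(...)" placeholder are hypothetical.

```python
import queue
import threading


def run_capture_pipeline(frames, buffer_size=4):
    """Pass frames from a capture thread to an encoder loop via a bounded buffer."""
    buf = queue.Queue(maxsize=buffer_size)  # software stand-in for buffer unit 40
    end_of_stream = object()                # sentinel marking the last frame
    encoded = []

    def capture():
        for frame in frames:
            buf.put(frame)      # blocks while the buffer is full (back-pressure)
        buf.put(end_of_stream)

    producer = threading.Thread(target=capture)
    producer.start()
    while True:
        frame = buf.get()       # blocks while the buffer is empty
        if frame is end_of_stream:
            break
        encoded.append(f"enc({frame})")  # placeholder for the encoding step
    producer.join()
    return encoded
```

Because `put` blocks when the queue is full and `get` blocks when it is empty, neither side can overrun the other, which is the behavior the text attributes to the buffer.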

Hardware card 20 is also preferably in communication with a card driver (not shown), which is a software library for controlling the interaction of hardware card 20 with the external environment, such as the PCI bus and the operating system of integrated device 12. The card driver is also preferably used for configuration of hardware card 20.

In addition, hardware card 20 also preferably operates with final encoding unit 28 (not shown; see Figure 1), which is an external software component for reading the compressed data that was processed by capturing unit 38. This 'reading' action is optionally and more preferably performed by 'playing back' the compressed data, so that the input to final encoding unit 28 is a decompressed digital feed. Final encoding unit 28 preferably then re-compresses the decompressed feed by using compression algorithms, which can be standard compression algorithms. Optionally and more preferably, compression is performed according to MPEG-4.
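As an illustrative analogue only, the two-stage sequence (MPEG-2 first, then decoding and re-encoding to MPEG-4) can be reproduced in software with the open-source ffmpeg tool instead of the hardware chip and Windows Media Tools. The sketch below merely builds the two ffmpeg command lines; the file names, the 300k bitrate and the choice of an MP4 container are assumptions for illustration, not part of the described system.

```python
from typing import List, Tuple


def two_stage_commands(source: str, width: int = 320, height: int = 240,
                       fps: int = 30, intermediate: str = "stage1.mpg",
                       final: str = "stage2.mp4",
                       final_bitrate: str = "300k") -> Tuple[List[str], List[str]]:
    """Build ffmpeg argument lists for an MPEG-2 pass followed by an MPEG-4 pass."""
    size = f"{width}x{height}"
    # Stage 1: digitized input -> MPEG-2 video at the operator's size and frame rate.
    stage1 = ["ffmpeg", "-y", "-i", source,
              "-c:v", "mpeg2video", "-s", size, "-r", str(fps),
              intermediate]
    # Stage 2: ffmpeg decodes the MPEG-2 file internally ("plays it back")
    # and re-compresses the decoded frames with its MPEG-4 Part 2 encoder.
    stage2 = ["ffmpeg", "-y", "-i", intermediate,
              "-c:v", "mpeg4", "-b:v", final_bitrate,
              final]
    return stage1, stage2
```

Each list could then be passed to `subprocess.run(cmd, check=True)` on a machine with ffmpeg installed; the stage-2 input is the stage-1 output, mirroring the decompress-then-recompress step described above.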

Final encoding unit 28 is optionally third party software such as Microsoft Windows™ Media Tools, which involves the Microsoft Windows Media SDK (Software Development Kit)™, Windows Media Encoder™ and other components that are a part of the Windows™ operating system, such as the Microsoft DirectShow™ filters. The output of this stage is more preferably re-compressed digital data in MPEG-4 format, optionally and most preferably wrapped in additional layers added by the encoding environment, which in this case is the Microsoft Windows Media Tools™. The final data format is then one of the file or stream types that the Microsoft Windows™ environment produces, such as *.ASF, *.WMV or *.WMA. The output data can then be transferred to one of the external environment components, such as storage device 24 of integrated device 12 (not shown; see Figure 1), an external storage device (not shown) or NIC 26 of integrated device 12 (not shown; see Figure 1).

MPEG encoding unit 46 performs MPEG-2 compression and is more preferably implemented as a single chip (such as the Fusion™ bt878A chip of Conexant USA for example). Capturing unit 38 may also optionally be implemented on that chip. If the chip is purchased "off the shelf", the software driver for such a chip is optionally and preferably used as the communicating interface to the remainder of hardware card 20. MPEG-4 compression is preferably performed by a software application, which as shown in Figure 1 is final encoding unit 28, and which is more preferably not located on hardware card 20, but is instead operated by integrated device 12 (not shown; see Figure 1) and/or another computational device.

Hardware card 20 also more preferably features an interface 48 to integrated device 12 itself (not shown; see Figure 1), which is most preferably implemented as a PCI bus interface. In addition, power is also preferably supplied through interface 48, and is optionally and more preferably controlled through a power controller 50.

Figure 3 shows an exemplary method for operation with the present invention. The workflow for the system of Figures 1 and 2, with the optional basic input and output components, is described below.

As shown, an initial analog signal input 52 is received from the video camera or other video capture device of Figure 1 (shown as an input unit 54). The analog signal could optionally be in the form of a composite or S-video signal, for example. More preferably, there are two sets of video input signals: a first set featuring four separate composite RCA inputs and a second set with one S-video input signal.

Next, an input device unit 56 of a capturing and initial encoding unit 58 receives the analog signal. This signal is processed according to the configuration set by the system driver, which 'defines' the input source and the input format. The parameters for this capture process may optionally and preferably be determined by the system operator through a system operator interface 62 as a set of capturing parameters 64. These capturing parameters 64 are then used for the subsequent operations which form the digital signal. The analog signal is optionally stored temporarily in a data buffer 66 while it is converted by a capturing unit 70 to form an uncompressed digital signal 68. Various formats for the input analog video signal may optionally be used, including but not limited to PAL and NTSC. These different formats may also optionally be used concurrently. For PAL video signals, the frame rate is optionally and preferably 25 fps (frames per second), while for NTSC video signals, the frame rate is optionally and preferably 30 fps. Multiple output resolutions are also optionally possible for each of these formats: for example, PAL video data could optionally be output at resolutions of 192x144, 384x288 and 768x576 pixels per frame, while NTSC video data could optionally be output at resolutions of 160x120, 320x240 and 640x480 pixels per frame. Of course, these frame rates, resolutions and video standards are only intended as examples, as many other implementations are possible within the scope of the present invention.
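The example frame rates and resolutions above can be captured in a small lookup table, which also makes it easy to work out the uncompressed data rate that the compression stages must reduce. The following Python sketch is illustrative only; the table layout and function names are hypothetical, and the 12 bits per pixel used in the example assumes a 4:2:0 YUV format such as YUV12.

```python
# Example capture modes taken from the text (illustrative, not exhaustive).
CAPTURE_MODES = {
    "PAL": {"fps": 25, "resolutions": {(192, 144), (384, 288), (768, 576)}},
    "NTSC": {"fps": 30, "resolutions": {(160, 120), (320, 240), (640, 480)}},
}


def validate_capture(standard: str, width: int, height: int) -> int:
    """Return the frame rate for a listed standard/resolution pair, else raise."""
    try:
        mode = CAPTURE_MODES[standard.upper()]
    except KeyError:
        raise ValueError(f"unsupported video standard: {standard}") from None
    if (width, height) not in mode["resolutions"]:
        raise ValueError(f"{width}x{height} is not a listed {standard} output size")
    return mode["fps"]


def raw_bitrate(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Uncompressed video data rate in bits per second."""
    return width * height * bits_per_pixel * fps


fps = validate_capture("NTSC", 320, 240)
print(raw_bitrate(320, 240, fps, 12))  # 27648000 bits/s, about 27.6 Mbit/s
```

Even the default 320 x 240 NTSC picture amounts to roughly 27.6 Mbit/s before compression, which illustrates why the compression stages are needed for network transmission.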

Different types of image formats for output image data are also possible within the scope of the present invention, such as YUV12, YUY2, YUV9, 15-bit RGB, 24-bit RGB, 32-bit RGBA and BTYU, all of which are different examples of color models used for encoding video data. Y is the luminance of the black and white signal. U and V are color difference signals: U is derived from blue minus Y (B-Y), and V is derived from red minus Y (R-Y). In order to display YUV data on a computer screen, it must be converted into RGB through a process known as "color space conversion." YUV is used because it saves storage space and transmission bandwidth compared to RGB, since the color difference signals can be stored at a lower resolution than the luminance signal. YUV is not compressed RGB; rather, it is a mathematically equivalent representation of RGB. Also known as component video, the YUV elements are written in various ways: (1) Y, R-Y, B-Y, (2) Y, Cr, Cb and (3) Y, Pr, Pb. Certain types of equipment provide YUV component video outputs, which typically consist of a set of three standard RCA connectors.
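The color space conversion mentioned above can be illustrated with the analog YUV equations of ITU-R BT.601, using normalized component values between 0 and 1. The following minimal Python sketch is for illustration only; the function names are hypothetical, and the bits-per-pixel figures are the average storage costs of a 4:2:0 format (12 bits) and of 24-bit RGB.

```python
from typing import Tuple


def rgb_to_yuv(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Convert normalized RGB to analog YUV (BT.601 luma weights)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luminance
    u = 0.492 * (b - y)                    # scaled blue color difference
    v = 0.877 * (r - y)                    # scaled red color difference
    return y, u, v


def yuv_to_rgb(y: float, u: float, v: float) -> Tuple[float, float, float]:
    """Exact inverse of rgb_to_yuv ("color space conversion" for display)."""
    b = y + u / 0.492
    r = y + v / 0.877
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return r, g, b


def frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Storage needed for one uncompressed frame."""
    return width * height * bits_per_pixel // 8


# A 320 x 240 frame: 4:2:0 YUV (12 bits/pixel) needs half the space of RGB24.
print(frame_bytes(320, 240, 12), frame_bytes(320, 240, 24))
```

The round trip through `rgb_to_yuv` and `yuv_to_rgb` returns the original values (up to floating-point rounding), which is the sense in which YUV is equivalent to RGB; the storage saving comes from subsampling U and V, not from the transform itself.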

An initial encoding unit 72 then preferably receives instructions for encoding digital signal 68 for the first stage of compression. These instructions 74 optionally include values for such parameters as encoded picture size and output format, which are optionally and preferably received from the system operator as initial encoding parameters 76. After initial encoding unit 72 performs the first stage of encoding, more preferably according to MPEG-2 and most preferably resulting in the formation of digital MPEG data in the "Half-D1" format, the resultant data is optionally stored in a buffer 78. The data is then preferably decompressed to form processed raw digital data, which is passed to a final encoding unit 80.

Final encoding unit 80 then preferably performs the final compression of the data for transmission over a network such as the Internet. The parameters for controlling this compression process are preferably received from the system operator as final encoding parameters 82, and optionally and more preferably include such parameters as encoded picture size and encoded file/stream type 84.

The distribution of the compressed data to a final destination(s) as a digital signal 88, such as an end-user computational device for example, is optionally and preferably controlled by distribution parameters 86. These distribution parameters are more preferably received from the system operator.
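The four groups of operator-supplied parameters in Figure 3 (capturing parameters 64, initial encoding parameters 76, final encoding parameters 82 and distribution parameters 86) could be held together in one settings object. The Python sketch below is a hypothetical arrangement, not the actual application layer; the field names, default values and the two cross-stage checks are assumptions added for illustration (for example, that enlarging the picture in the second stage cannot restore detail discarded in the first).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CapturingParameters:        # capturing parameters 64
    standard: str = "NTSC"
    input_source: str = "composite-1"   # hypothetical input name


@dataclass
class InitialEncodingParameters:  # initial encoding parameters 76 (first stage)
    width: int = 320
    height: int = 240
    output_format: str = "MPEG-2"


@dataclass
class FinalEncodingParameters:    # final encoding parameters 82 (second stage)
    width: int = 320
    height: int = 240
    stream_type: str = "ASF"      # encoded file/stream type 84


@dataclass
class DistributionParameters:     # distribution parameters 86
    destinations: List[str] = field(default_factory=list)
    store_locally: bool = True


@dataclass
class OperatorSettings:
    capture: CapturingParameters = field(default_factory=CapturingParameters)
    initial: InitialEncodingParameters = field(default_factory=InitialEncodingParameters)
    final: FinalEncodingParameters = field(default_factory=FinalEncodingParameters)
    distribution: DistributionParameters = field(default_factory=DistributionParameters)

    def warnings(self) -> List[str]:
        """Assumed sanity checks across stages (not taken from the text)."""
        problems = []
        if self.final.width > self.initial.width or self.final.height > self.initial.height:
            problems.append("final picture size exceeds the first-stage picture size")
        if not self.distribution.destinations and not self.distribution.store_locally:
            problems.append("compressed output has no destination")
        return problems
```

Grouping the parameters by stage mirrors the flowchart, so that each unit receives only the parameter set it needs.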

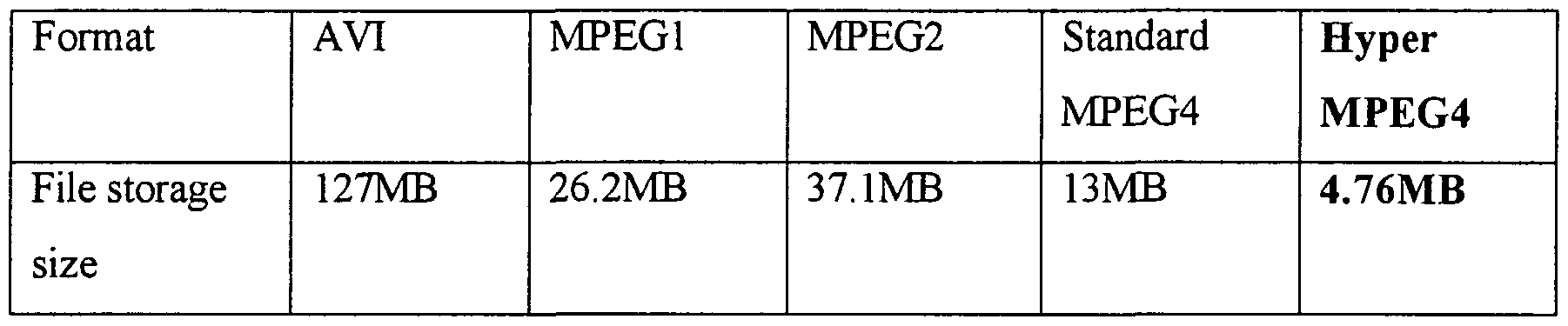

The device and system of the present invention, as constructed according to the preferred embodiments of Figure 2 and as operated according to the preferred embodiments of Figure 3, showed better performance than background art devices when tested for the ability to compress data for storage. The test video data was one minute in length, at a single quality setting and picture resolution. The table below shows the results for the present invention (labeled as "Hyper MPEG4"), in comparison to devices operating according to AVI, MPEG1, MPEG2 and standard MPEG4 (that is, compression only with MPEG4, as opposed to the preferred embodiments of the present invention, which incorporate compression according to both MPEG2 and MPEG4).

According to optional but preferred implementations and applications of the present invention, the system and method of the present invention may optionally and preferably be used for both on-line video broadcasting and off-line video broadcasting. On-line video broadcasting involves the direct streaming transmission of video data to a distribution point, such as an end-user device for example, after the video data has been captured, processed and compressed. Off-line video broadcasting is similar, except that the compressed video data is stored locally and/or transmitted to a remote storage device for future distribution or playback. In order to provide these different functions, as previously described, the system of the present invention features an application layer. Preferably, this application layer contains software functions which fall into two categories: the system operating environment; and the system service support functions.

The system operating environment preferably includes all of the previously described system core functions, together with the ability to configure these functions, operate them and also optionally connect to higher level applications, such as the system service support functions.

Examples of such basic operating environment functions include but are not limited to: a "plug and play" mechanism, which enables the hardware card of the present invention to be recognized by the operating system of the integrated device as a new hardware device, attached through the PCI interface; settings and system parameters which are set by the system operator and which are optionally protected with a password; and an authentication mechanism to protect features, settings and configuration of the system. In addition, various types of transmission options are provided as previously described, including direct transmission of data, storage of data or a combination thereof.
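The password protection of operator settings mentioned above could, for example, be built on a salted key-derivation function so that the password itself is never stored. The following Python sketch is one possible approach under that assumption, using the standard library's PBKDF2; the class name, the iteration count and the setting names are hypothetical.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 100_000  # assumed work factor for PBKDF2


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Derive a salted PBKDF2-SHA256 digest; only salt and digest are stored."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    _, candidate = hash_password(password, salt)
    return hmac.compare_digest(candidate, digest)  # constant-time comparison


class ProtectedSettings:
    """Operator settings that can only be changed after password verification."""

    def __init__(self, password: str, **initial):
        self._salt, self._digest = hash_password(password)
        self._values = dict(initial)

    def get(self, key: str):
        return self._values[key]

    def set(self, password: str, key: str, value) -> None:
        if not verify_password(password, self._salt, self._digest):
            raise PermissionError("invalid operator password")
        self._values[key] = value
```

Reading a setting requires no password in this sketch, while every change is authenticated; a real implementation might also protect read access to sensitive configuration.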

Optionally and more preferably, archiving management software is provided for managing the storage of the captured and compressed data. These archiving functions may optionally include a file search engine, for searching for video data by file; the provision of either automatic or manual determination of file names; sorting files by name, date, time and/or other file characteristics; uploading files to a remote distribution point or end-user device automatically or manually, with automatic uploading being performed according to a pre-defined policy and schedule; and playing back file data upon request.
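The archiving functions listed above (automatic naming, searching, sorting and policy-driven uploading) can be sketched as a small in-memory index. The Python example below is illustrative only; the naming scheme, the ".asf" extension and the age-based upload policy are assumptions standing in for the unspecified "pre-defined policy".

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class ArchiveEntry:
    name: str
    created: datetime
    size_bytes: int
    uploaded: bool = False


def auto_name(prefix: str, when: datetime, extension: str = "asf") -> str:
    """Hypothetical automatic file naming based on the capture time."""
    return f"{prefix}_{when:%Y%m%d_%H%M%S}.{extension}"


class Archive:
    def __init__(self) -> None:
        self.entries: List[ArchiveEntry] = []

    def add(self, entry: ArchiveEntry) -> None:
        self.entries.append(entry)

    def search(self, text: str) -> List[ArchiveEntry]:
        """Case-insensitive substring search on file names."""
        needle = text.lower()
        return [e for e in self.entries if needle in e.name.lower()]

    def sorted_by(self, attribute: str, descending: bool = False) -> List[ArchiveEntry]:
        """Sort by an entry attribute such as 'name', 'created' or 'size_bytes'."""
        return sorted(self.entries, key=lambda e: getattr(e, attribute), reverse=descending)

    def due_for_upload(self, now: datetime, min_age: timedelta) -> List[ArchiveEntry]:
        """Assumed policy: upload files not yet uploaded that are at least min_age old."""
        return [e for e in self.entries if not e.uploaded and now - e.created >= min_age]
```

A scheduler could call `due_for_upload` periodically and mark each entry as uploaded once its transfer succeeds.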

Other functions of the system of the present invention may also optionally be automatically scheduled. In addition or alternatively, different functions within the system of the present invention may also optionally be controlled remotely.

According to another preferred embodiment of the present invention, the system can optionally support a point-to-point videoconference through an external software application, such that the hardware card of the present invention is used as the capturing device for a video/audio conference session. Examples of such external software applications include but are not limited to 'Windows™ 2000 dialer', 'NetMeeting™', and 'CUSeeMe™'.

According to still another preferred embodiment of the present invention, the system can optionally support a "live" help desk connection, such that the system operator and/or end user is able to directly access a customer-support center, for example using the previously described video conference, chat and/or telephone communication. These may be implemented by using standardized video-conference software applications which are known in the art; however the efficiency of operation of these applications is greatly increased according to the present invention since the various parameters for processing and compressing the video data are provided to these applications, which can thus be adjusted for optimal performance with the present invention.

It should be noted that although the previous description centers around the capture, processing and compression of video data, the present invention may also optionally be used for separate compression of video/image and audio data. In addition, it is also assumed that the previous references to "video" data include combined audio and video data, where appropriate.