Performance testing measures how well systems respond when subjected to a variety of workloads. The key qualities being tested for performance are stability and responsiveness. Performance testing shows the robustness and reliability of systems in general, along with specific potential breaking points. k6 is an open source framework built to make performance testing fun for developers.

In this tutorial, you will use k6 to do load testing on a simple API hosted on the Heroku platform. Then you will learn how to interpret the results obtained from the tests. Along the way, you will learn how to automate your k6 tests in a continuous integration (CI) pipeline to get consistent, reliable feedback as you make updates to your API.

This tutorial is a companion to HTTP request testing with k6.

Prerequisites

To follow this tutorial, you will need:

- Basic knowledge of software testing

- A GitHub account

- A CircleCI account

- A Grafana Cloud k6 account

As a performance testing framework, k6 stands out because of the way it combines usability and performance. Tools like a command-line interface to execute scripts and a dashboard to monitor the test run results add to its appeal.

k6 is written in Go and runs test scripts with goja, a JavaScript engine implemented in pure Go that supports ES2015 (ES6). That means you can use JavaScript to write k6 scripts, although only syntax compatible with JavaScript ES2015 is supported.

Note: k6 does not run on a Node.js engine. It uses a JavaScript engine written in Go.

Now that you know what runs under the k6 hood, why not take on setting up k6 to run your performance tests?

Cloning the repository

First, clone the sample application from the GitHub repository.

git clone https://github.com/CIRCLECI-GWP/performance-testing-with-k6.git

The next step is to install k6 on your machine.

Installing k6

Unlike JavaScript modules, k6 is installed with a system package manager rather than npm. On macOS you can use Homebrew. On Windows, you can use Chocolatey. There are more installation options available for other operating systems in the k6 documentation. For this tutorial we will follow the macOS installation guide. Execute this Homebrew command in your terminal:

brew install k6

This is the only command you need to run to get started with writing k6 tests.

Writing your first performance test

Great work! You have installed k6 and know how it works. Next up is understanding what k6 measures and what metrics you can use to write your first load test. Load testing models the expected usage of a system by simulating multiple users accessing the system at the same time. In this case, you will be simulating multiple users accessing the API within specified time periods.

Load testing our application

The load tests in the examples measure the responsiveness and stability of the API system when subject to different groups of users. The example tests determine the breaking points of the system, and acceptable limits.

In this tutorial, you will be running scripts against a k6 test file that first creates a todo in an already hosted API application on Heroku. For this to be considered a load test, you must include factors like scalable virtual users, scalable requests, or variable time. This tutorial focuses on scaling users to the application as the tests run. This feature comes pre-built with the k6 framework, which makes it super easy to implement.

To simulate this, you will use the k6 concept of executors. Executors supply the horsepower in the k6 execution engine and are responsible for how the scripts execute. An executor may determine:

- Number of users to be added

- Number of requests to be made

- Duration of the test

- Traffic received by the test application

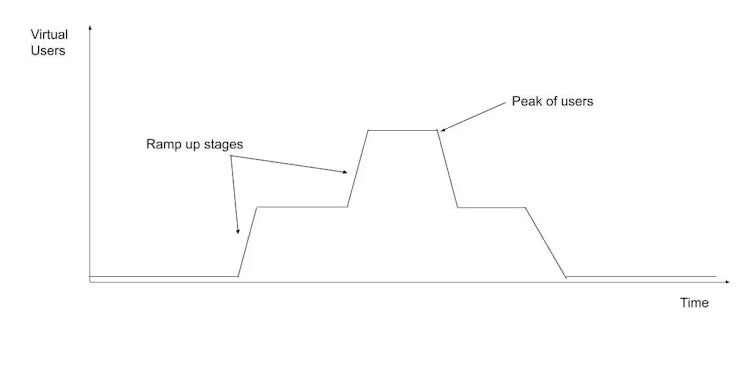

In the first test, you will use a k6 executor with an approach that k6 refers to as ramping up. In the ramping up approach, k6 incrementally adds virtual users (VUs) to run a script until a peak is reached. It then gradually decreases the number within the stipulated time, until the execution time ends.

Write this load test to incorporate the idea of executors, virtual users, and the test itself. Here is an example:

import http from 'k6/http';
import { check, group } from 'k6';

export let options = {
  stages: [
    { duration: '0.5m', target: 3 }, // ramp up from 1 to 3 virtual users over 0.5 minutes
    { duration: '0.5m', target: 4 }, // ramp up from 3 to 4 virtual users over 0.5 minutes
    { duration: '0.5m', target: 0 }, // ramp down to 0 virtual users
  ],
};

export default function () {
  // Confirm that the API is reachable
  group('API uptime check', () => {
    const response = http.get('https://aqueous-brook-60480.herokuapp.com/todos/');
    check(response, {
      "status code should be 200": res => res.status === 200,
    });
  });

  let todoID;
  // Create a todo and confirm that it comes back in its initial state
  group('Create a Todo', () => {
    const response = http.post('https://aqueous-brook-60480.herokuapp.com/todos/',
      {"task": "write k6 tests"}
    );
    todoID = response.json()._id;
    check(response, {
      "status code should be 200": res => res.status === 200,
    });
    check(response, {
      "response should have created todo": res => res.json().completed === false,
    });
  });
}

In this script, the options object gradually increases the number of users within a given time in the defined stages. Although the executor is not explicitly defined, k6 identifies the stages, durations, and targets in the options object, and determines that the executor is ramping-vus.
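For comparison, the same ramp can be spelled out as an explicit scenario. The following is a minimal sketch, assuming the stages shortcut starts from one virtual user. The scenario name `ramp_up` is a placeholder chosen for illustration, and in a real k6 script the object would be exported as `options`.

```javascript
// Sketch only: an explicit scenario that should behave like the `stages` shortcut.
// In a k6 script this object would be exported: `export const options = { ... }`.
const options = {
  scenarios: {
    // `ramp_up` is a hypothetical scenario name; any identifier works.
    ramp_up: {
      executor: 'ramping-vus', // the executor k6 infers from a top-level `stages` array
      startVUs: 1,             // assumption: the shortcut starts from one virtual user
      stages: [
        { duration: '0.5m', target: 3 },
        { duration: '0.5m', target: 4 },
        { duration: '0.5m', target: 0 },
      ],
    },
  },
};

console.log(options.scenarios.ramp_up.executor);
```

Naming the executor explicitly is mostly useful once a script needs more than one scenario or a different executor type.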

In the load test script, our target is a maximum of 4 concurrent users within a period of 1 minute and 30 seconds. The script will therefore increase application users gradually and carry out as many create-todo request iterations as possible within that time frame.

In other words, the test makes as many requests as possible within the specified time frame while the number of active virtual users varies according to the provided stages. The success of this test depends on the number of successful requests made to the application inside the time period. Here is a graph of virtual users of the test against time:

This ramp-up graph illustration shows that as time increases, virtual users are incrementally added to the load test until the peak number of users is reached. In the final stage, the number drops back toward zero as the virtual users complete their requests and exit the system. For this tutorial, the peak number of users is 4. The ramping-vus executor is not the only one available for running load tests. k6 provides other executors, and which one to use depends on the need and nature of the performance test you want to execute.
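The shape of that ramp can be approximated with a few lines of JavaScript. This is a rough model rather than k6's scheduler: it assumes linear interpolation between stage targets and a start of one virtual user, and the helper names `toSeconds` and `plannedVUs` are made up for this sketch.

```javascript
// Convert a simple k6-style duration such as '0.5m' or '30s' to seconds.
// Compound values like '1m30s' are deliberately not handled in this sketch.
function toSeconds(duration) {
  const match = /^(\d+(?:\.\d+)?)(s|m|h)$/.exec(duration);
  if (!match) throw new Error(`unsupported duration: ${duration}`);
  const factor = { s: 1, m: 60, h: 3600 }[match[2]];
  return parseFloat(match[1]) * factor;
}

// Approximate number of virtual users planned at second `t`,
// assuming a linear ramp between consecutive stage targets.
function plannedVUs(stages, t, startVUs = 1) {
  let from = startVUs;
  let elapsed = 0;
  for (const stage of stages) {
    const length = toSeconds(stage.duration);
    if (length > 0 && t <= elapsed + length) {
      const progress = (t - elapsed) / length;
      return from + (stage.target - from) * progress;
    }
    elapsed += length;
    from = stage.target;
  }
  return 0; // all stages are over
}

const stages = [
  { duration: '0.5m', target: 3 },
  { duration: '0.5m', target: 4 },
  { duration: '0.5m', target: 0 },
];

for (const t of [0, 30, 60, 75, 90]) {
  console.log(`${t}s -> ${plannedVUs(stages, t)} VUs`);
}
```

Under these assumptions the model peaks at 4 virtual users at the 60-second mark and returns to 0 at 90 seconds, which matches the three stages in the script.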

The example performance test script shows that you can use k6 to define your test, the number of virtual users that your machine will run, and also create different assertions with check() under group() blocks for the execution script.

Note: Groups in k6 let you organize a large load script into logical sections so that you can analyze the results of each section. Checks are assertions, often placed within groups, but they work differently from other types of assertions: they do not halt the execution of the load test when they fail.
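To make the non-halting behavior concrete, here is a simplified stand-in written in plain JavaScript. It is not the k6 implementation; the function name `sketchCheck`, the `tally` object, and the failing response are all invented for illustration. Like the real check(), it returns a boolean instead of throwing.

```javascript
// Simplified stand-in for k6's check(); it is NOT the k6 implementation.
const tally = { passes: 0, fails: 0 };

function sketchCheck(value, assertions) {
  let allPassed = true;
  for (const [label, predicate] of Object.entries(assertions)) {
    if (predicate(value)) {
      tally.passes += 1;
    } else {
      tally.fails += 1;
      allPassed = false;
      console.log(`check failed: ${label}`);
    }
  }
  return allPassed; // no exception is thrown, so the caller keeps running
}

const fakeResponse = { status: 500 }; // hypothetical failing response
const ok = sketchCheck(fakeResponse, {
  'status code should be 200': (res) => res.status === 200,
});

// Execution reaches this line even though the check failed.
console.log(ok, tally);
```

Because a failed check is only counted, the pass rate in the end-of-test summary is what tells you something went wrong.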

Running performance tests using k6

Now you are ready to start executing tests that will help you understand the performance capabilities of your systems and applications. You will test the limits of a Heroku free dyno and the hosted Todo API application deployed on it. While this is not an ideal server for running load tests, it does let you identify when and under what circumstances the system reaches its breaking point. For this tutorial, the breaking point could appear in Heroku or in your API application. This test will make multiple HTTP requests to Heroku with virtual users (VUs). Starting with one virtual user and ramping up to 4, the virtual users will create todo items for a duration of 1 minute and 30 seconds.

Note: We are making the assumption that Heroku is able to handle 4 concurrent sessions of users interacting with our API.

To run the performance test from the previous code snippet, just run this command on your terminal:

k6 run create-todo-http-request.js

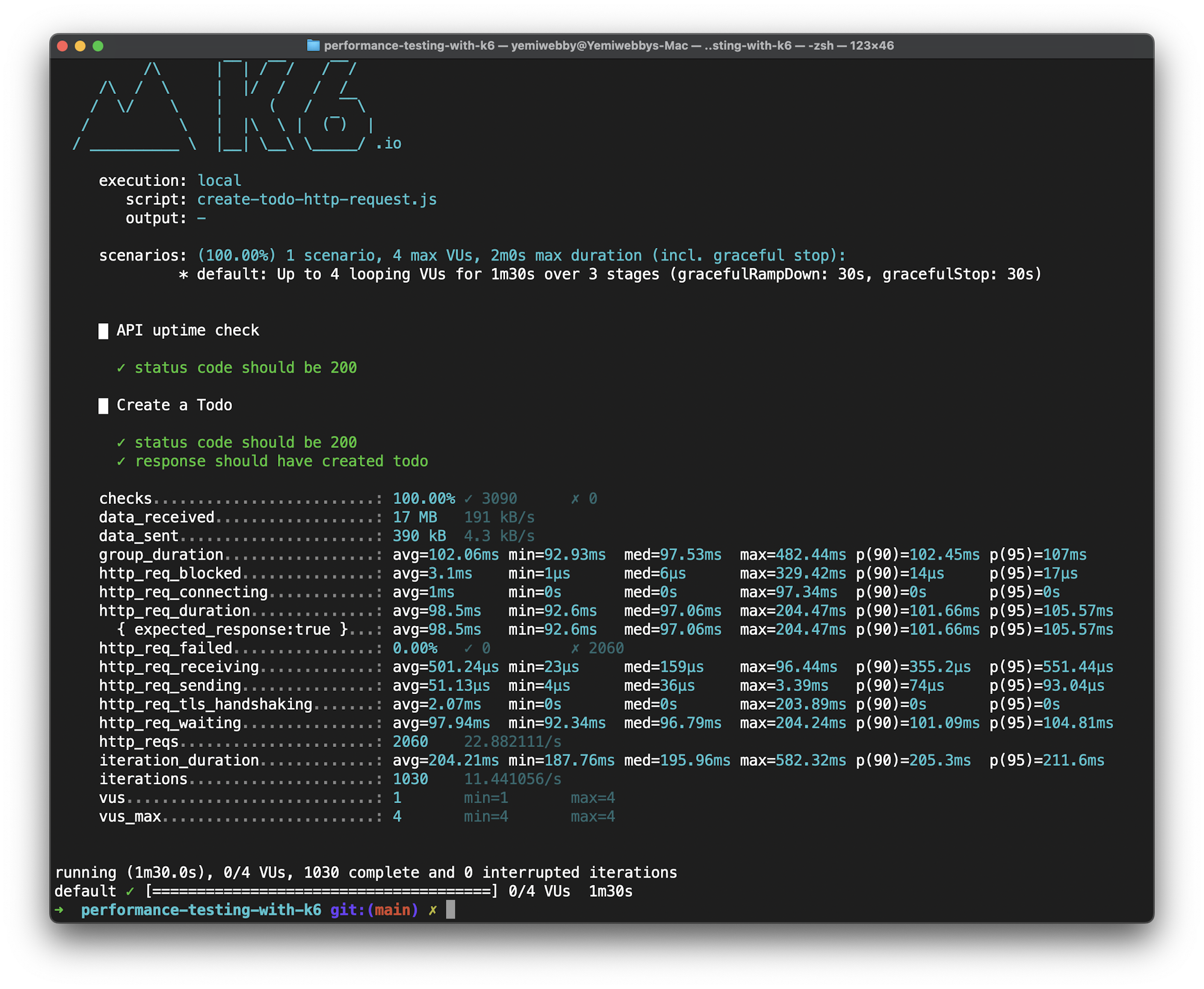

When this test has finished, you will have your first results from a k6 test run.

Use the results to verify that the performance test executed for 1 minute and 30.3 seconds and that all the iterations of the test passed. You can also verify that in the period that the test was executed, 4 virtual users made a total of 1030 iterations (completed the process of creating todo items).

Other metrics you can obtain from the data include:

- checks, the number of completed check() assertions declared on the test (all of them passed)

- Total http_reqs, the number of all the HTTP requests sent out (2060 requests), which combines the iterations of creating new todo items with the HTTP requests for checking the uptime state of our Heroku server

This test was successful, and you were able to obtain meaningful information about the application. However, you do not yet know if your system has any breaking points or if the application could start behaving strangely after a certain number of concurrent users starts using the API.

Adding another HTTP request will help you find out if you can break the Heroku server or even your application. You will also reduce the number of virtual users and the duration of the test to save on resources. These adjustments can be made in the configuration section by simply commenting out the last two stages of the execution.

Note: “Breaking the application” means overwhelming the system to the point that it returns errors instead of a successful execution, without changing resources or application code. That means the system returns errors based only on changing the number of users or the number of requests.

stages: [
  { duration: '0.5m', target: 3 }, // ramp up from 1 to 3 virtual users over 0.5 minutes
  // { duration: '0.5m', target: 4 }, -> commented out to prevent execution
  // { duration: '0.5m', target: 0 }, -> commented out to prevent execution
],

This configuration shortens the duration of execution and reduces the number of virtual users used to execute your test. Next, execute the same performance test you used previously but add an HTTP request to:

- Fetch the created Todo

- Verify that its todoID is consistent with what was created

- Verify that its state of creation is completed: false
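The verifications listed above could be expressed as a label-to-predicate map of the kind check() accepts. This is a sketch under assumptions, not the contents of the repository file: the helper name `fetchedTodoChecks`, the labels, and the stand-in response are invented here, while the `_id` and `completed` fields come from the earlier script.

```javascript
// Build the label -> predicate map that k6's check() accepts as its second argument.
function fetchedTodoChecks(todoID) {
  return {
    'status code should be 200': (res) => res.status === 200,
    'fetched todo should have the created id': (res) => res.json()._id === todoID,
    'fetched todo should not be completed': (res) => res.json().completed === false,
  };
}

// Stand-in for a k6 response so the sketch runs outside k6 (hypothetical data).
const fakeResponse = {
  status: 200,
  json: () => ({ _id: 'abc123', task: 'write k6 tests', completed: false }),
};

// Inside a k6 group this would be: check(response, fetchedTodoChecks(todoID));
// where `response` comes from an http.get() on the todo's URL (route not shown here).
const results = Object.entries(fetchedTodoChecks('abc123'))
  .map(([label, predicate]) => [label, predicate(fakeResponse)]);
console.log(results);
```

Keeping the predicates in a small function makes it easy to reuse the same verifications wherever a todo is fetched.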

To execute this performance test, you will need the create-and-fetch-todo-http-request.js file, which is already in the root of the project directory in our cloned repository. Go to the terminal and execute this command:

k6 run create-and-fetch-todo-http-request.js

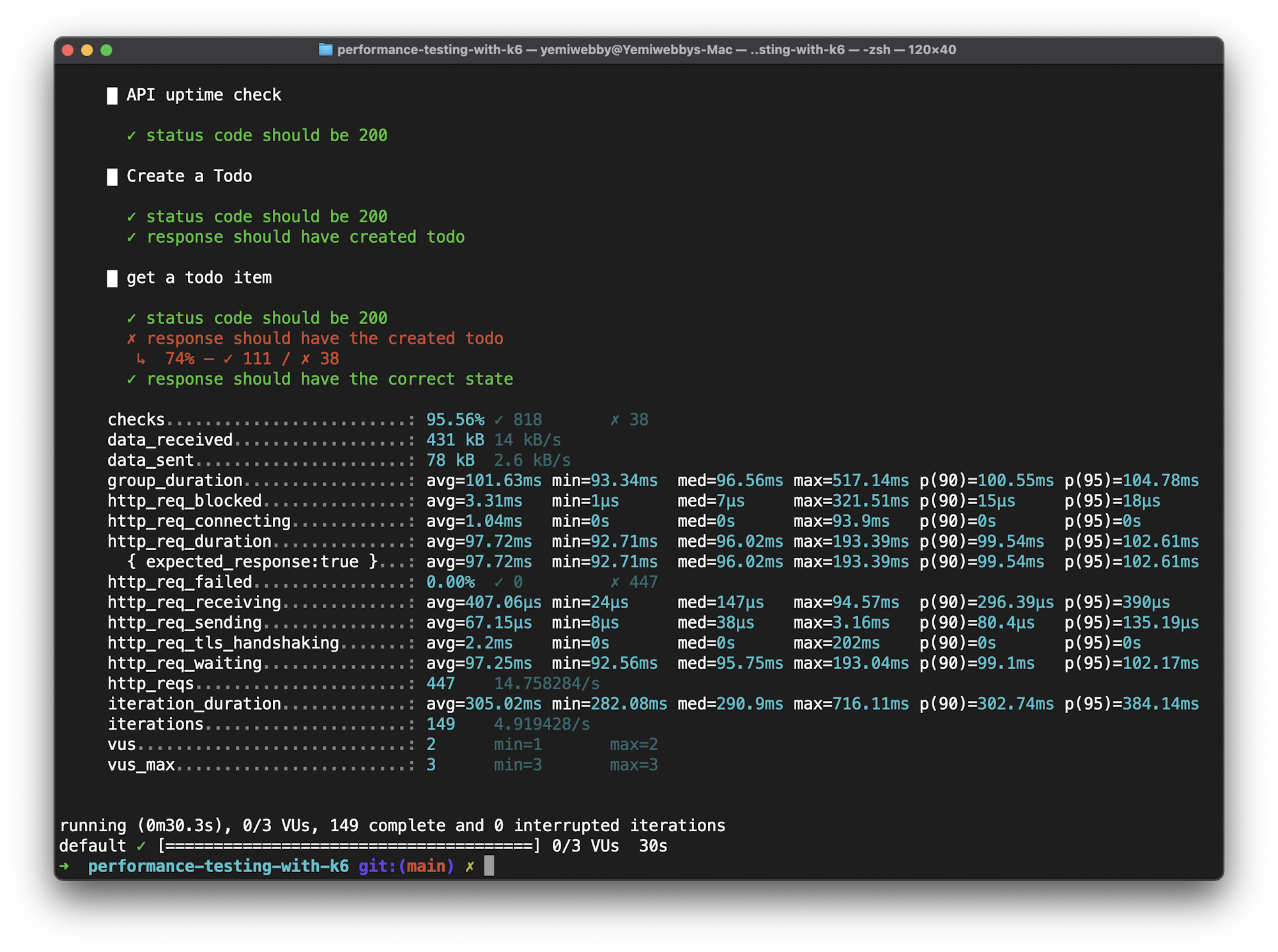

Once this command has completed execution, you will get the test results.

These results show that your test actually broke the limits of the free Heroku dynos for your application. Although there were successful requests, not all of them returned responses in a timely manner. That then led to the failure of some requests. With at most 3 virtual users and a test duration of 30 seconds, there were 149 complete iterations. Across those 149 iterations, 95.56% of checks were successful.

The failed checks were related to the response of fetching the todoID of the created Todo items. This could be the first indicator of a bottleneck in the API or the system. You can verify that the http_reqs total is 447, the total of the requests from the creation of the todo items, fetching of the todo items data, and verifying the uptime of the Heroku server:

149 iterations * 3 requests per iteration = 447 requests
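The request totals from both runs follow from the same arithmetic, shown here as a small sketch. The helper name `requestSummary` is made up for this example, and the 30-second duration for the second run is the nominal stage length rather than a measured value.

```javascript
// Total requests = iterations * requests made in each iteration.
function requestSummary(iterations, requestsPerIteration, durationSeconds) {
  const totalRequests = iterations * requestsPerIteration;
  return {
    totalRequests,
    requestsPerSecond: totalRequests / durationSeconds,
  };
}

// First run: uptime check + create todo, over 1 minute and 30.3 seconds.
const firstRun = requestSummary(1030, 2, 90.3);
// Second run: uptime check + create todo + fetch todo, over a nominal 30 seconds.
const secondRun = requestSummary(149, 3, 30);

console.log(firstRun.totalRequests, secondRun.totalRequests); // 2060 447
console.log(firstRun.requestsPerSecond.toFixed(1), secondRun.requestsPerSecond.toFixed(1));
```

Comparing requests per second across the two runs gives a rough sense of how much the extra fetch request slowed each iteration down.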

From these test runs, it is clear that performance test metrics are not standard across the board for all systems and applications. These numbers may vary depending on the machine configuration, the nature of the application, and even the systems that the application under test depends on. The goal is to ensure that bottlenecks are identified and fixed. You need to understand the type of load your system can handle so that you can plan for the future, including usage spikes and above-average demand for resources.

In the previous step, you were able to execute performance tests for the application hosted on a Heroku free plan and analyze the command line results. With k6, you can also analyze the results of performance tests using the cloud dashboard. The dashboard is a web-based interface that allows you to review the results of your performance tests.

k6 cloud configuration and output

Running k6 performance tests and getting the output on your terminal is great, but it is also good to share the results and output with the rest of the team. In addition to the many cool analysis features, sharing data is where the k6 dashboard really shines.

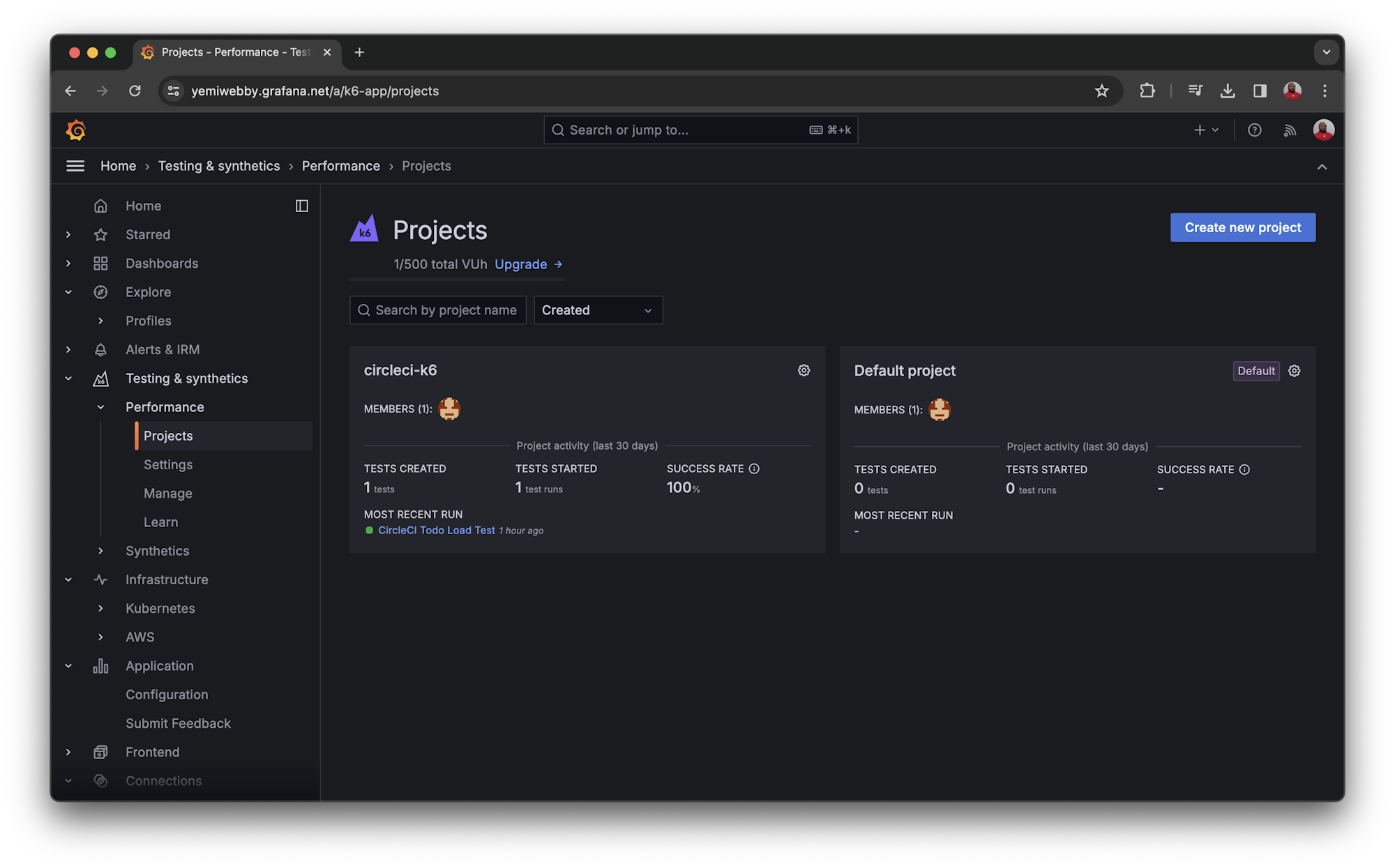

To configure the output for the k6 dashboard, log in to Grafana Cloud k6 with the account details you created earlier. Next, create a new project by navigating to the Testing & Synthetics tab in the sidebar.

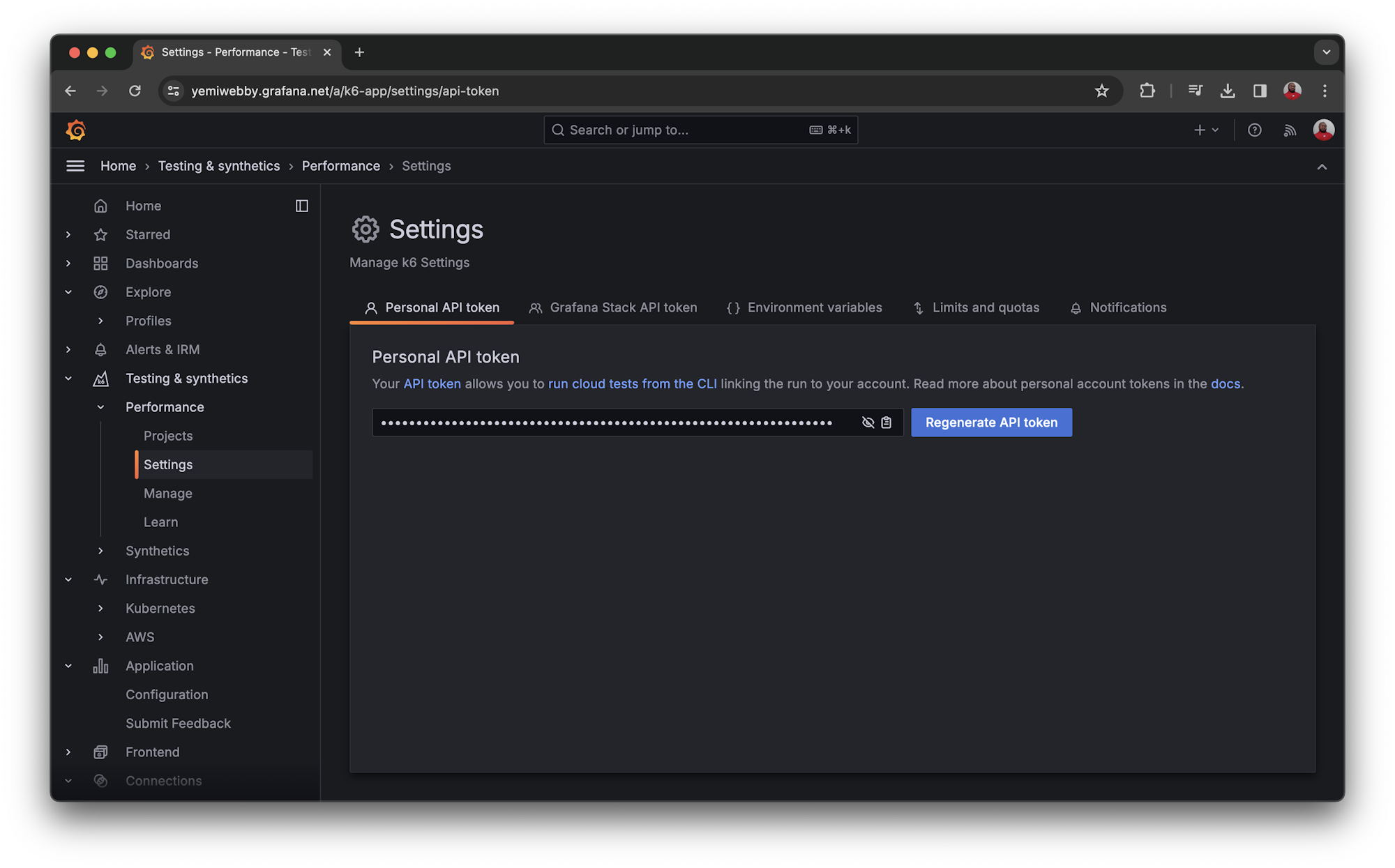

Now click Settings and copy your personal API token.

Once you have copied your API token, open the create-and-fetch-todo-http-request.js file and update the options object to include the projectID:

export let options = {
  ...
  ext: {
    loadimpact: {
      projectID: YOUR_PROJECT_ID,
      // Test runs with the same name groups test runs together
      name: "CircleCI Todo Load Test",
    },
  },
};

Next, add K6_CLOUD_TOKEN as an environment variable. Run this command in your terminal to set the k6 cloud token:

export K6_CLOUD_TOKEN=<k6-cloud-token>

Note: Replace the cloud token value in the example with the k6 token you just copied from the dashboard.

Now you can execute your load tests. Use this command:

k6 run --out cloud create-and-fetch-todo-http-request.js

--out cloud tells k6 to output results to the cloud. k6 automatically creates dashboards with test results when the execution starts. You can use the dashboard to evaluate the results of your performance tests.

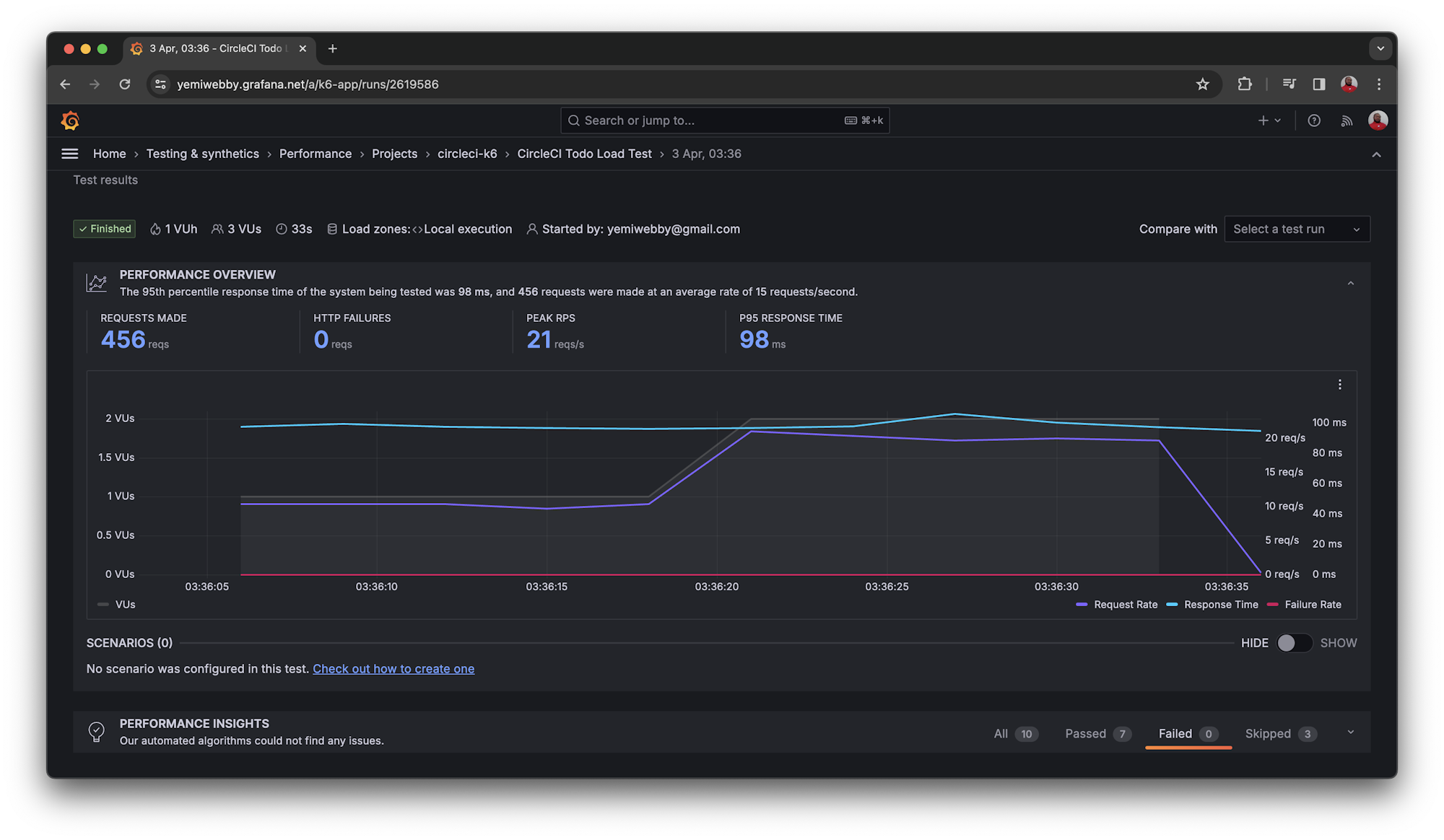

The dashboard makes interpretation of results more convenient and easier to share than terminal output. The first metrics from your uploaded run are the total number of requests and how requests were made by different virtual users. There is also information about when different users were making requests.

This graph shows the total number of virtual users against the number of requests that were made, and the average response time of every request. It gets more interesting as you drill down on every request. The k6 dashboard makes this possible using group() and check().

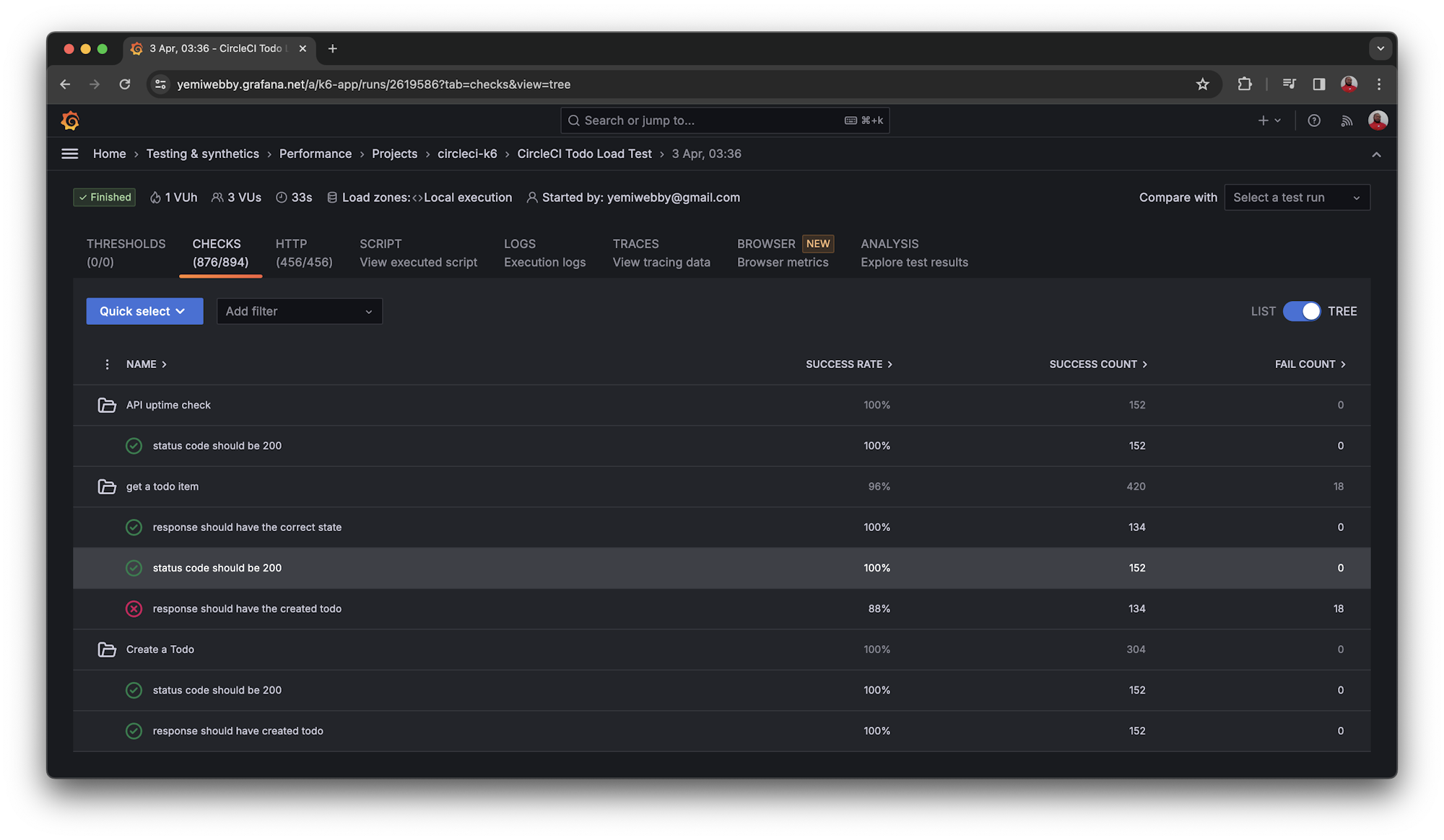

Try this: select the Checks tab, then filter the results using the tree view.

All the requests from the test run are listed in chronological order. The results also show the success and failure rates, the average time the requests took, and the total number of requests executed.

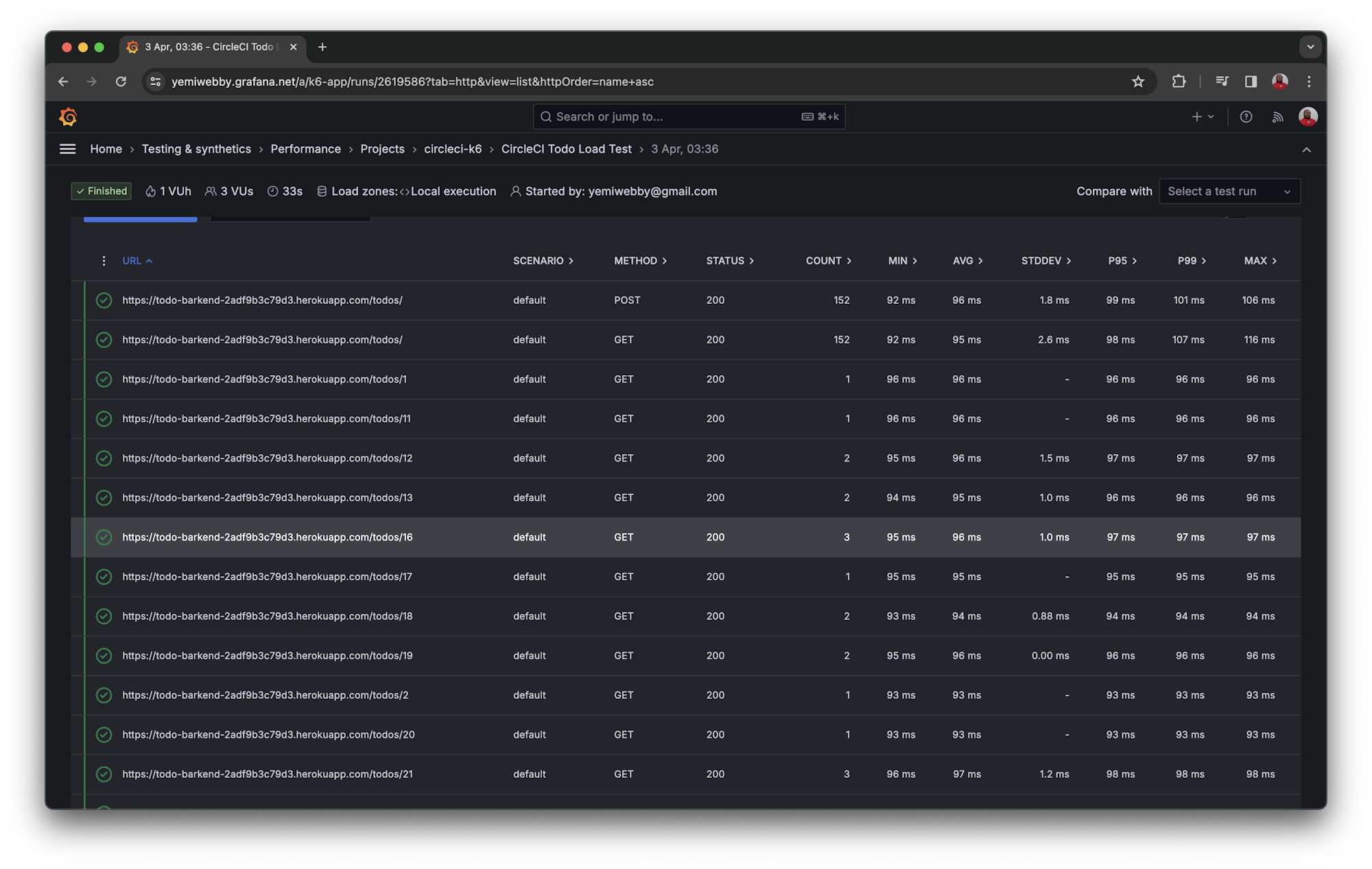

To evaluate the individual requests and their times, select the HTTP tab in the same performance insight page. You can review all the requests made, the time they took to execute, and how the execution compares with other requests. For example, one request falls into the 95th percentile, while another one was in the 99th. The dashboard shows the maximum amount of time it took to execute the longest request with a standard deviation figure for reference purposes. The page provides you almost any kind of data point that you and your team might use to identify bottlenecks.
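For readers who want to see what those figures mean, the sketch below computes a percentile, a maximum, and a standard deviation from a list of request durations. The sample values are hypothetical, and the nearest-rank percentile used here is an assumption that may differ slightly from how the dashboard interpolates its percentiles.

```javascript
// Nearest-rank percentile over an ascending array of values.
function percentile(sortedValues, p) {
  const rank = Math.ceil((p / 100) * sortedValues.length);
  return sortedValues[Math.max(rank, 1) - 1];
}

// Population standard deviation.
function stdDev(values) {
  const mean = values.reduce((sum, v) => sum + v, 0) / values.length;
  const variance = values.reduce((sum, v) => sum + (v - mean) ** 2, 0) / values.length;
  return Math.sqrt(variance);
}

// Hypothetical request durations in milliseconds, not taken from a real run.
const durations = [200, 120, 900, 150, 310, 135, 240, 180, 450, 160];
const sorted = [...durations].sort((a, b) => a - b);

console.log(percentile(sorted, 50), percentile(sorted, 95), Math.max(...sorted)); // 180 900 900
console.log(stdDev(durations).toFixed(1));
```

In this sample a single slow request dominates both the 95th percentile and the maximum, which is the kind of outlier the HTTP tab helps you spot.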

You can also use the k6 dashboard to select a specific request and identify its performance against average run times. This would be useful in identifying issues like database bottlenecks caused by a large number of locked resources when multiple users are accessing the resources.

There is so much you and your team can benefit from using the power of the k6 dashboard.

To get the most out of your k6 tests, you will want them to run each time you make an update to your code. However, manually triggering tests can be time-consuming and prone to errors. Instead, you can configure a CircleCI CI/CD pipeline to automatically run your tests after every change.

Setting up Git and pushing to CircleCI

Note: If you have already cloned the project repository, you can skip this part of the tutorial. I have added the steps here if you want to learn how to set up your own project.

To set up CircleCI, initialize a Git repository in the project by running the following command:

git init

Next, create a .gitignore file in the root directory. Inside the file add node_modules to keep npm-generated modules from being added to your remote repository. Then, add a commit and push your project to GitHub.

Setting up CircleCI

Create a .circleci directory in your root directory and then add a config.yml file. The config file holds the CircleCI configuration for every project. Execute your k6 tests using the CircleCI k6 orb in this configuration:

version: 2.1
orbs:
  k6io: k6io/test@1.1.0
workflows:
  load_test:
    jobs:
      - k6io/test:
          script: create-todo-http-request.js

Using third-party orbs

CircleCI orbs are reusable packages of YAML configurations that condense code into a single line. To allow the use of third-party orbs like k6io/test@1.1.0, you may need to:

- Enable organization settings if you are the administrator, or

- Request permission from your organization’s CircleCI admin.

Next, log in to CircleCI and go to the Projects dashboard. You can choose the repository that you want to set up from a list of all the GitHub repositories associated with your GitHub username or your organization.

The project for this tutorial is named performance-testing-with-k6. On the Projects dashboard, select the option to set up the project you want. Enter the name of the branch where your code is housed and click Set Up Project.

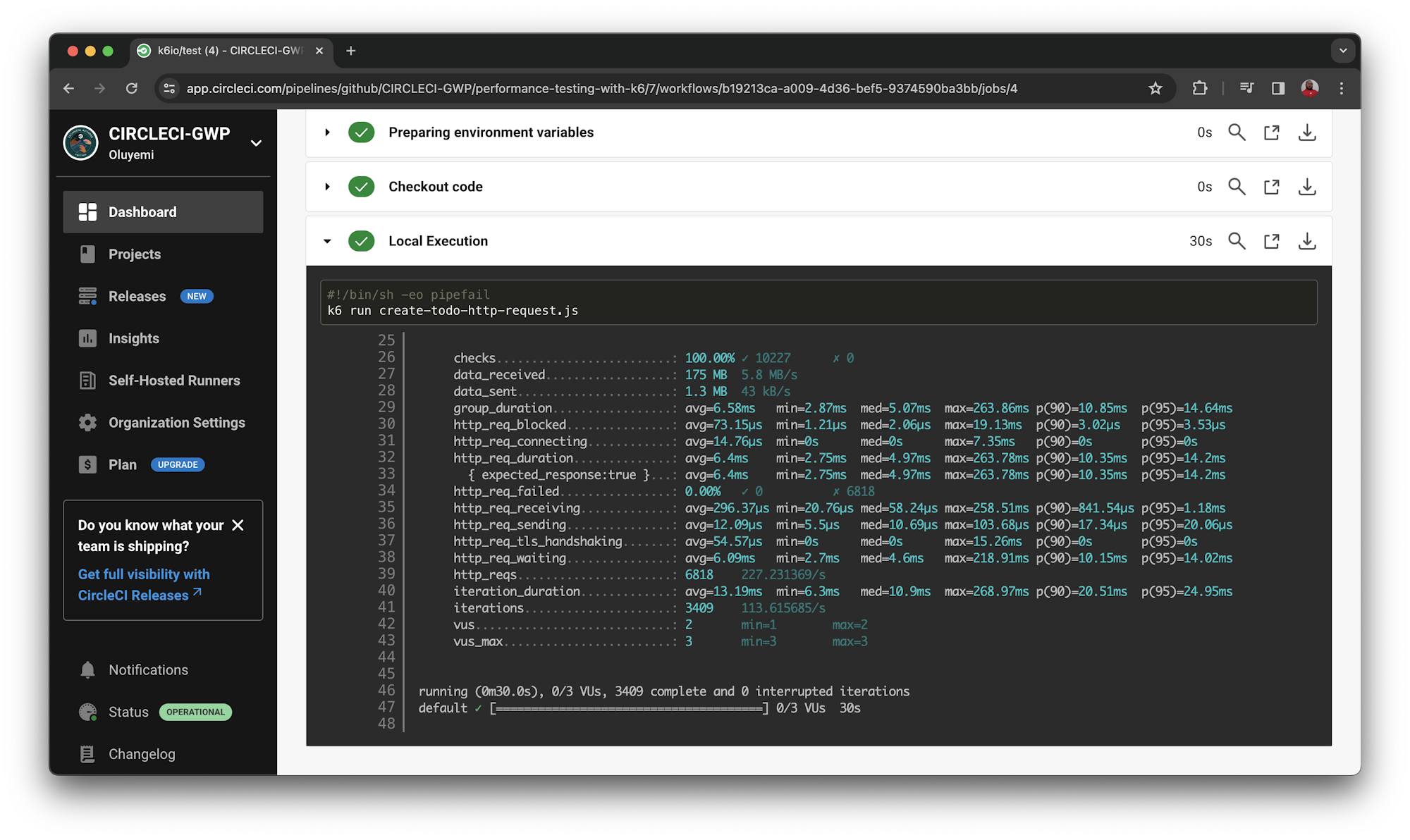

Once the build is initialized, your pipeline should run and pass.

Open the job details to verify that your k6 performance tests ran successfully as part of the CircleCI pipeline. Great work!

Now it is time to add metrics to measure the execution time of each endpoint.

Evaluating API request time

From the previous performance tests, it is clear that the test structure is less important than how the system reacts to the testing loads at any particular time. k6 comes with a metrics feature called Trend that lets you customize metrics for your console and cloud outputs. You can use Trend to find out the specific time of every request made to an endpoint by customizing how the timings will be defined. Here is an example code block:

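import http from 'k6/http';
import { check, group } from 'k6';
import { Trend } from 'k6/metrics';

// The metric names are illustrative; each Trend needs its own name
// so that the two timings are reported separately
const uptimeTrendCheck = new Trend('uptime_check_duration');
const todoCreationTrend = new Trend('todo_creation_duration');

export let options = {
  stages: [
    { duration: '0.5m', target: 3 }, // ramp up from 1 to 3 virtual users over 0.5 minutes
  ],
};

export default function () {
  group('API uptime check', () => {
    const response = http.get('https://todo-barkend-2adf9b3c79d3.herokuapp.com/todos/');
    // Record how long this request took
    uptimeTrendCheck.add(response.timings.duration);
    check(response, {
      "status code should be 200": res => res.status === 200,
    });
  });
  // The 'Create a Todo' group (omitted here) would add its timing to todoCreationTrend the same way
}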

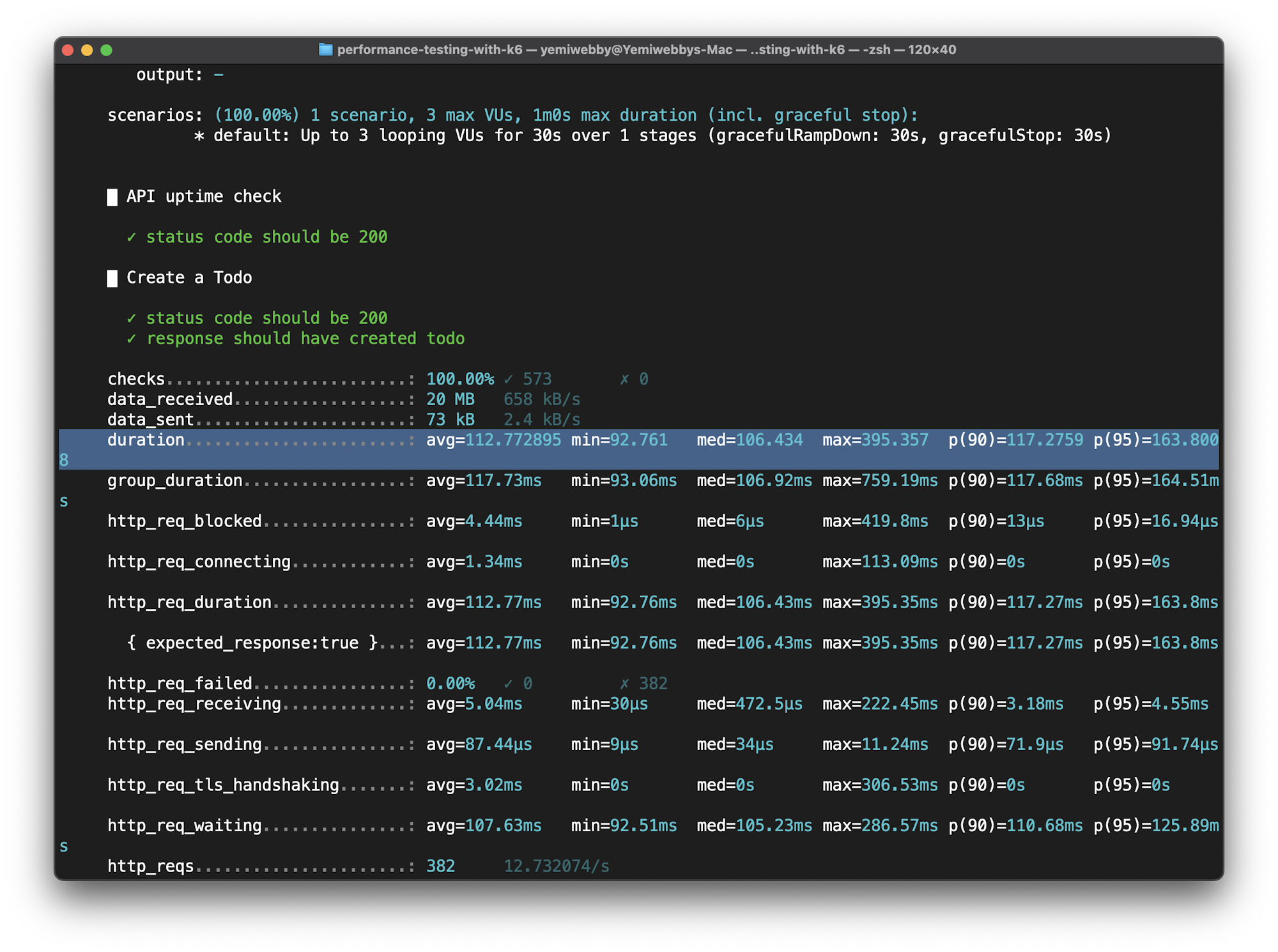

To implement k6 Trend, import it from k6/metrics, then define each trend you want. For this part of the tutorial, you only need to check the response time for the API when doing an uptime check, or when creating a new todo. Once the trends are created, navigate to the specific tests and add the timing data you need to the declared trends.
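The pattern of adding a timing to a declared trend can be sketched outside k6 as follows. The `SketchTrend` class is a minimal stand-in written for this example, not the k6 Trend class, and the `recordDuration` helper, the metric name, and the response values are hypothetical. Only `add()` and `response.timings.duration` mirror the calls used in the script.

```javascript
// Minimal stand-in for a k6 Trend so the sketch runs outside k6; NOT the k6 class.
class SketchTrend {
  constructor(name) {
    this.name = name;
    this.samples = [];
  }
  add(value) {
    this.samples.push(value);
  }
  summary() {
    const total = this.samples.reduce((sum, v) => sum + v, 0);
    return {
      avg: total / this.samples.length,
      min: Math.min(...this.samples),
      max: Math.max(...this.samples),
    };
  }
}

// Record a response's duration on a trend and hand the response back,
// so the call can wrap http.get() or http.post() inside a group.
function recordDuration(trend, response) {
  trend.add(response.timings.duration);
  return response;
}

const todoCreationTrend = new SketchTrend('todo_creation_duration'); // hypothetical metric name
// Hypothetical responses; in k6 these would come from http.post().
const fakeResponses = [
  { timings: { duration: 120 } },
  { timings: { duration: 180 } },
  { timings: { duration: 300 } },
];
fakeResponses.forEach((response) => recordDuration(todoCreationTrend, response));

console.log(todoCreationTrend.summary()); // { avg: 200, min: 120, max: 300 }
```

In a real script the summary is produced by k6 itself at the end of the run, so only the add() call is needed.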

Execute your todo creation performance test file using the command:

k6 run create-todo-http-request.js

Check the console response. You can find this test file in the root directory of the cloned repository. Once this passes locally, commit and push the changes to GitHub.

Once your tests finish executing, you can review the descriptions of the trends that you added in the previous step. Each has the timings for the individual requests, including the average, maximum, and minimum execution times and their execution percentiles.

Conclusion

This tutorial introduced you to what k6 is and how to use it to run performance tests. You went through the process of creating a simple k6 test for creating a todo list item to be run against a Heroku server. I also showed you how to interpret k6 performance test results in the command line terminal and in the cloud dashboard. Lastly, we explored using custom metrics and k6 Trends.

I encourage you to share this tutorial with your team, and continue to expand on what you have learned.

I hope you enjoyed this tutorial as much as I did creating it!

Waweru Mwaura is a software engineer and a life-long learner who specializes in quality engineering. He is an author at Packt and enjoys reading about engineering, finance, and technology. You can read more about him on his web profile.